PAPER

https://arxiv.org/abs/1710.10903

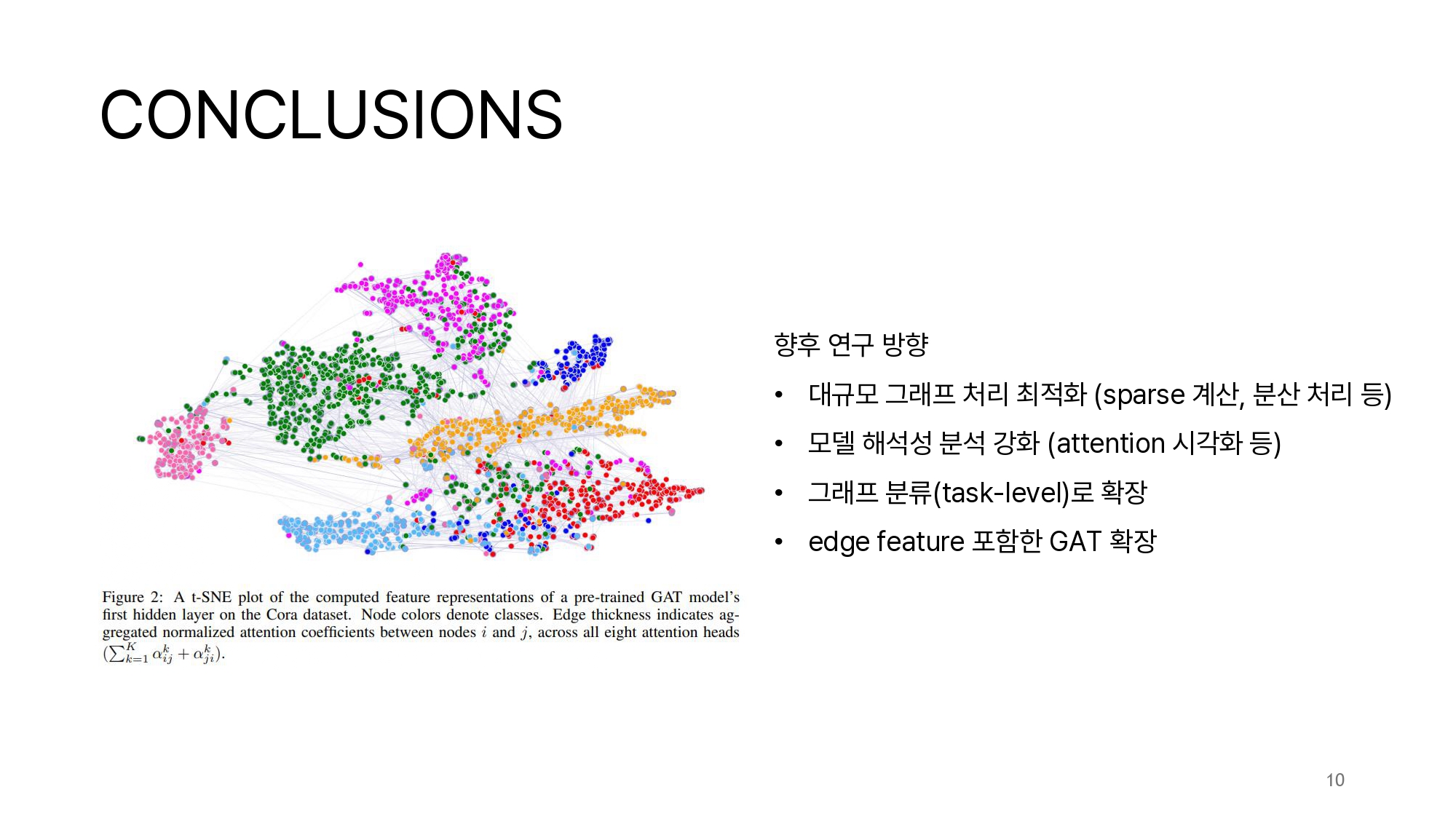

Graph Attention Networks

We present graph attention networks (GATs), novel neural network architectures that operate on graph-structured data, leveraging masked self-attentional layers to address the shortcomings of prior methods based on graph convolutions or their approximations.

arxiv.org

Foundational Knowledge for GATs

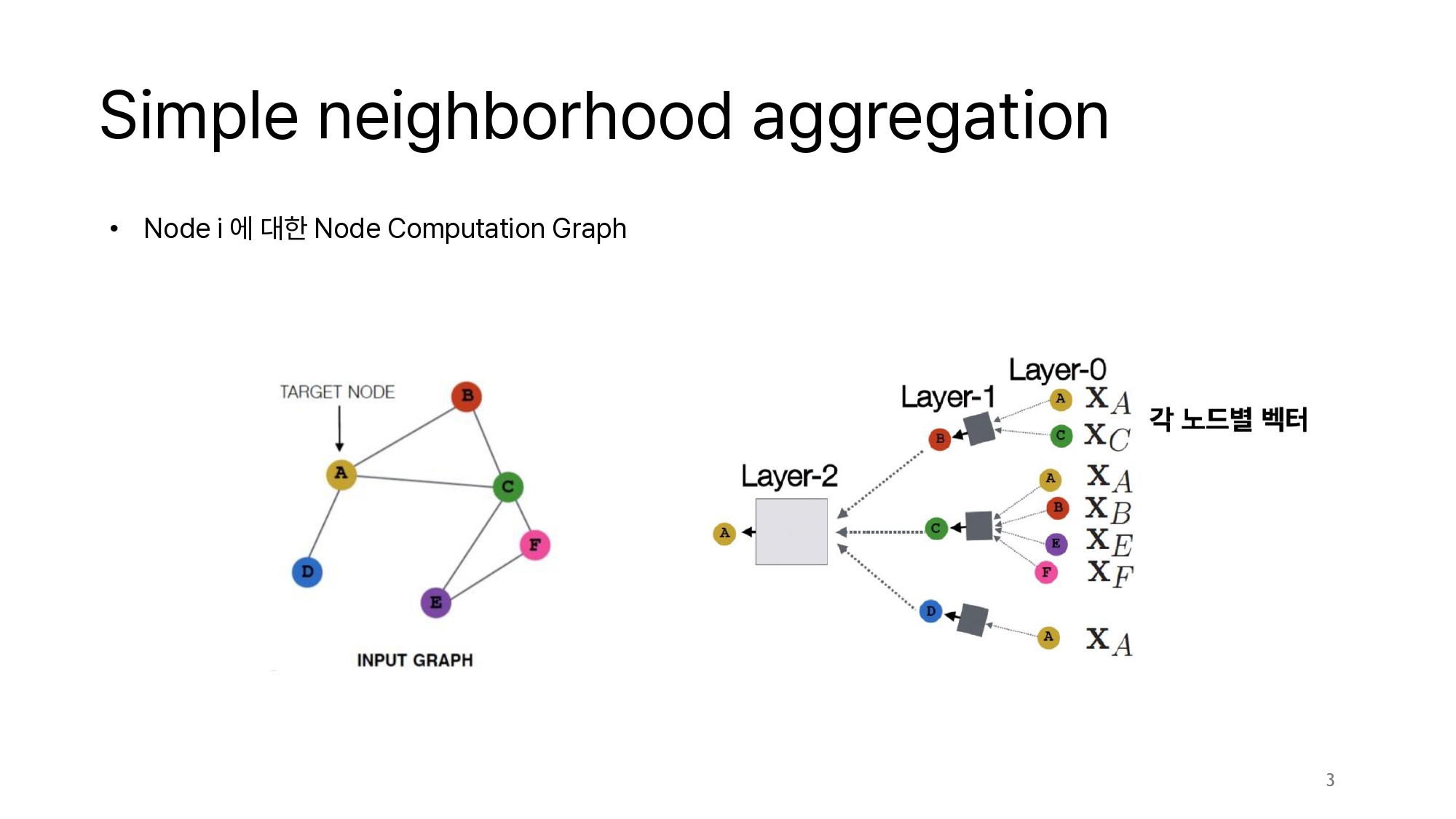

Simple neighborhood aggregation

https://doraemin.tistory.com/246

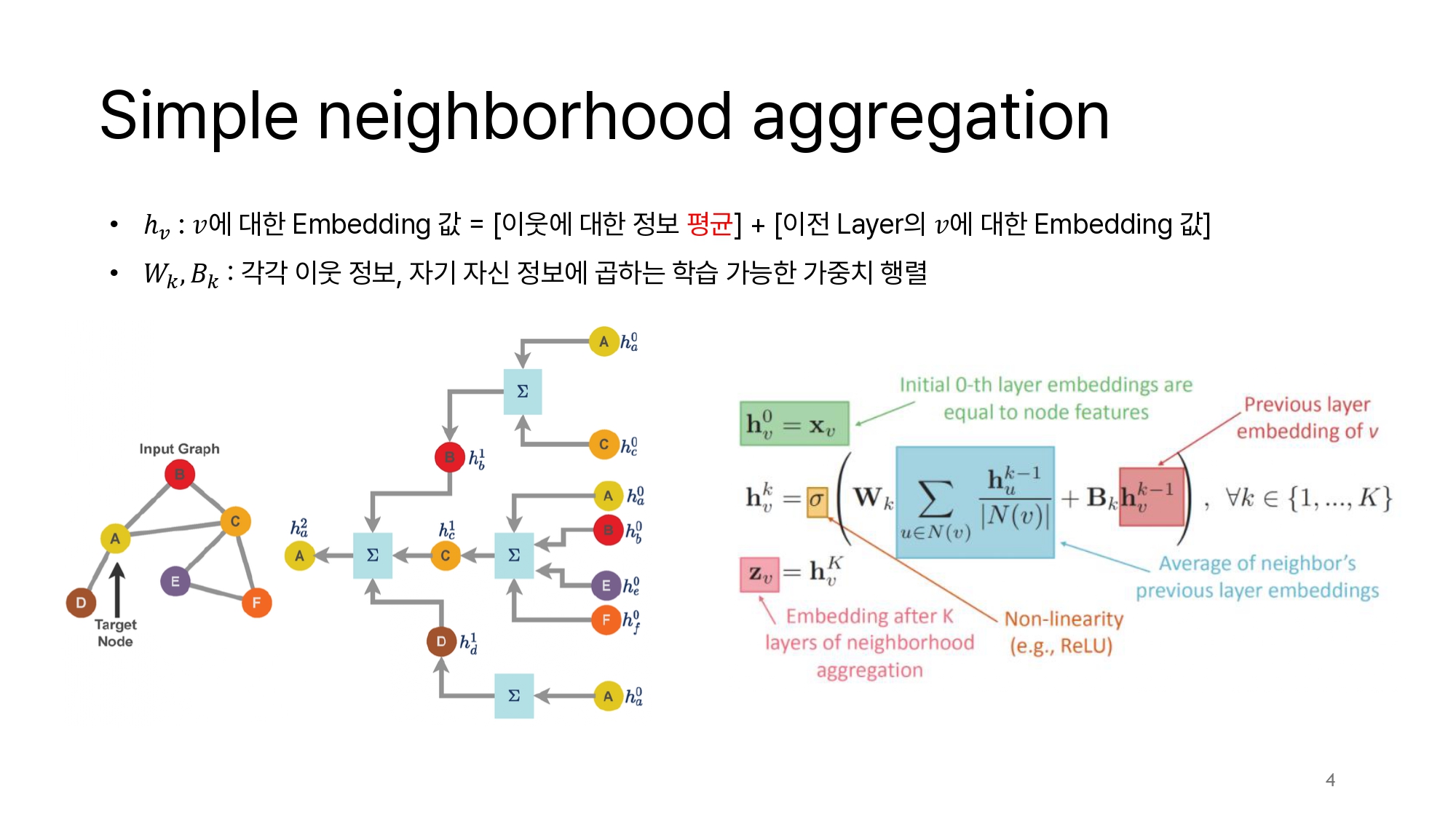

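Node computation graph for node i. $h_v^k$: embedding (output) of **node $v$** at layer $k$; $h_u^{k-1}$: embedding (input) of neighbor node $u$ from the previous layer; $N(v)$: set of neighbors of node $v$; $W_k$, $B_k$: applied to the neighbor information and to the node itself, respectively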

doraemin.tistory.com
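As a hedged illustration of the symbols listed in this card, the sketch below assumes the common mean-aggregation form of the layer, $h_v^k = \sigma\left(W_k \sum_{u \in N(v)} \frac{h_u^{k-1}}{|N(v)|} + B_k h_v^{k-1}\right)$, with ReLU standing in for $\sigma$. The function name `simple_aggregation_layer`, the NumPy array layout, and the choice of ReLU are assumptions for illustration, not taken from the linked post.

```python
import numpy as np


def simple_aggregation_layer(H_prev, neighbors, W_k, B_k):
    """One layer of mean neighborhood aggregation (assumed form):
    h_v^k = ReLU(W_k @ mean_{u in N(v)} h_u^{k-1} + B_k @ h_v^{k-1})

    H_prev:    (num_nodes, d_in) embeddings from layer k-1
    neighbors: dict mapping node v -> list of neighbor ids N(v)
    W_k, B_k:  (d_out, d_in) weights for neighbor information and for the node itself
    """
    num_nodes, d_in = H_prev.shape
    H_next = np.zeros((num_nodes, W_k.shape[0]))
    for v in range(num_nodes):
        nbrs = neighbors.get(v, [])
        # Mean of the neighbors' previous-layer embeddings (zero vector if v is isolated)
        agg = H_prev[nbrs].mean(axis=0) if nbrs else np.zeros(d_in)
        # Neighbor message through W_k plus the node's own embedding through B_k
        H_next[v] = W_k @ agg + B_k @ H_prev[v]
    return np.maximum(H_next, 0.0)  # ReLU used as sigma (assumption)


# Example: 3-node graph with identity weights, so each row = neighbor mean + self
H0 = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
N = {0: [1, 2], 1: [0], 2: [0, 1]}
H1 = simple_aggregation_layer(H0, N, np.eye(2), np.eye(2))
# H1 → [[1.5, 1.0], [1.0, 1.0], [1.5, 1.5]]
```

With $W_k = B_k = I$ the two roles are easy to see: each output row is the plain neighbor mean plus the node's own embedding. Every neighbor contributes equally in this form; GAT replaces the uniform average with learned per-neighbor weights.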

Graph Attention Network (GAT)

https://doraemin.tistory.com/247

Building on the concept of simple neighborhood aggregation, let's look at the structure of the Graph Attention Network (GAT), which adds attention to it. Simple neighborhood aggregation: node computation graph for node i, $h_v^k$: …

doraemin.tistory.com
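A minimal single-head sketch of the attention mechanism described in the GAT paper (arXiv 1710.10903): a shared linear map $W$, logits $e_{ij} = \mathrm{LeakyReLU}(\mathbf{a}^\top [W h_i \,\|\, W h_j])$ with negative slope 0.2, and a softmax masked to $j \in N(i) \cup \{i\}$. The function names, the dense NumPy adjacency matrix, and ELU as the output nonlinearity are assumptions for illustration; the paper additionally uses multi-head attention and dropout, which are omitted here.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    # Attention logits use LeakyReLU with negative slope 0.2
    return np.where(x > 0, x, slope * x)


def gat_layer(H, adj, W, a):
    """Single-head GAT layer (sketch, dense O(N^2) attention).

    H:   (N, F) input node features h_i
    adj: (N, N) adjacency (nonzero = edge); self-loops are added so i attends to itself
    W:   (F, F') shared linear transform
    a:   (2F',) attention vector
    Returns (H_out, alpha): new features (N, F') and attention coefficients (N, N).
    """
    n = H.shape[0]
    Wh = H @ W                      # (N, F'): W h_i for every node
    f_out = Wh.shape[1]
    # a^T [W h_i || W h_j] = a[:F']^T W h_i + a[F':]^T W h_j
    src = Wh @ a[:f_out]            # (N,): term from target node i
    dst = Wh @ a[f_out:]            # (N,): term from neighbor j
    e = leaky_relu(src[:, None] + dst[None, :])  # (N, N) raw scores e_ij
    # Masked attention: only j in N(i) ∪ {i} take part in the softmax
    mask = adj.astype(bool) | np.eye(n, dtype=bool)
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)  # numerical stability; diagonal keeps max finite
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    # h_i' = sigma(sum_j alpha_ij W h_j), with ELU as sigma (assumption)
    out = alpha @ Wh
    H_out = np.where(out > 0, out, np.expm1(np.minimum(out, 0.0)))
    return H_out, alpha
```

Setting $\mathbf{a} = 0$ makes every logit equal, so each node averages uniformly over itself and its neighbors, which is just mean aggregation with a self-loop. A learned $\mathbf{a}$ is what lets $\alpha_{ij}$ differ from neighbor to neighbor.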

[GAT] self-attention

https://doraemin.tistory.com/251

Although GAT does use self-attention, it is a simplified form that differs from the Transformer-style Key/Query/Value (KQV) structure. Nevertheless, we can examine how its parts correspond from the familiar K/Q/V perspective…

doraemin.tistory.com
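One hedged way to make the K/Q/V comparison concrete: because $\mathbf{a}^\top [W h_i \,\|\, W h_j] = \mathbf{a}_1^\top W h_i + \mathbf{a}_2^\top W h_j$, where $\mathbf{a}_1, \mathbf{a}_2$ are the two halves of $\mathbf{a}$, the GAT logit splits into a scalar computed from node $i$ alone (query-like) and a scalar computed from neighbor $j$ alone (key-like), while $W h_j$ plays the value role. A Transformer instead scores pairs with a dot product $q_i \cdot k_j / \sqrt{d}$ between separately projected vectors. The sketch computes both; the function names and this particular mapping are illustrative assumptions, not the linked post's own correspondence.

```python
import numpy as np


def gat_logits(Wh, a, slope=0.2):
    """GAT scores e_ij = LeakyReLU(a^T [Wh_i || Wh_j]) for all pairs (i, j), unmasked."""
    f = Wh.shape[1]
    query_like = Wh @ a[:f]  # a_1^T W h_i: depends only on the target node i
    key_like = Wh @ a[f:]    # a_2^T W h_j: depends only on the neighbor j
    s = query_like[:, None] + key_like[None, :]  # additive: no i-j interaction term
    return np.where(s > 0, s, slope * s)


def concat_form(Wh, a, i, j, slope=0.2):
    """Direct evaluation of LeakyReLU(a^T [Wh_i || Wh_j]) for one pair, for comparison."""
    s = a @ np.concatenate([Wh[i], Wh[j]])
    return s if s > 0 else slope * s


def transformer_logits(H, Wq, Wk):
    """Scaled dot-product scores q_i . k_j / sqrt(d) with separate Q and K projections."""
    Q = H @ Wq
    K = H @ Wk
    return (Q @ K.T) / np.sqrt(K.shape[1])
```

Since LeakyReLU is strictly increasing and the query-like term is constant across $j$ for a fixed $i$, every node ranks candidate neighbors in the same order of $\mathbf{a}_2^\top W h_j$; only the masking to $N(i)$ changes which of them are present. This is one concrete sense in which GAT's attention is simpler than dot-product attention, where $q_i \cdot k_j$ lets each query prefer different keys.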

PAPER REVIEW SLIDE