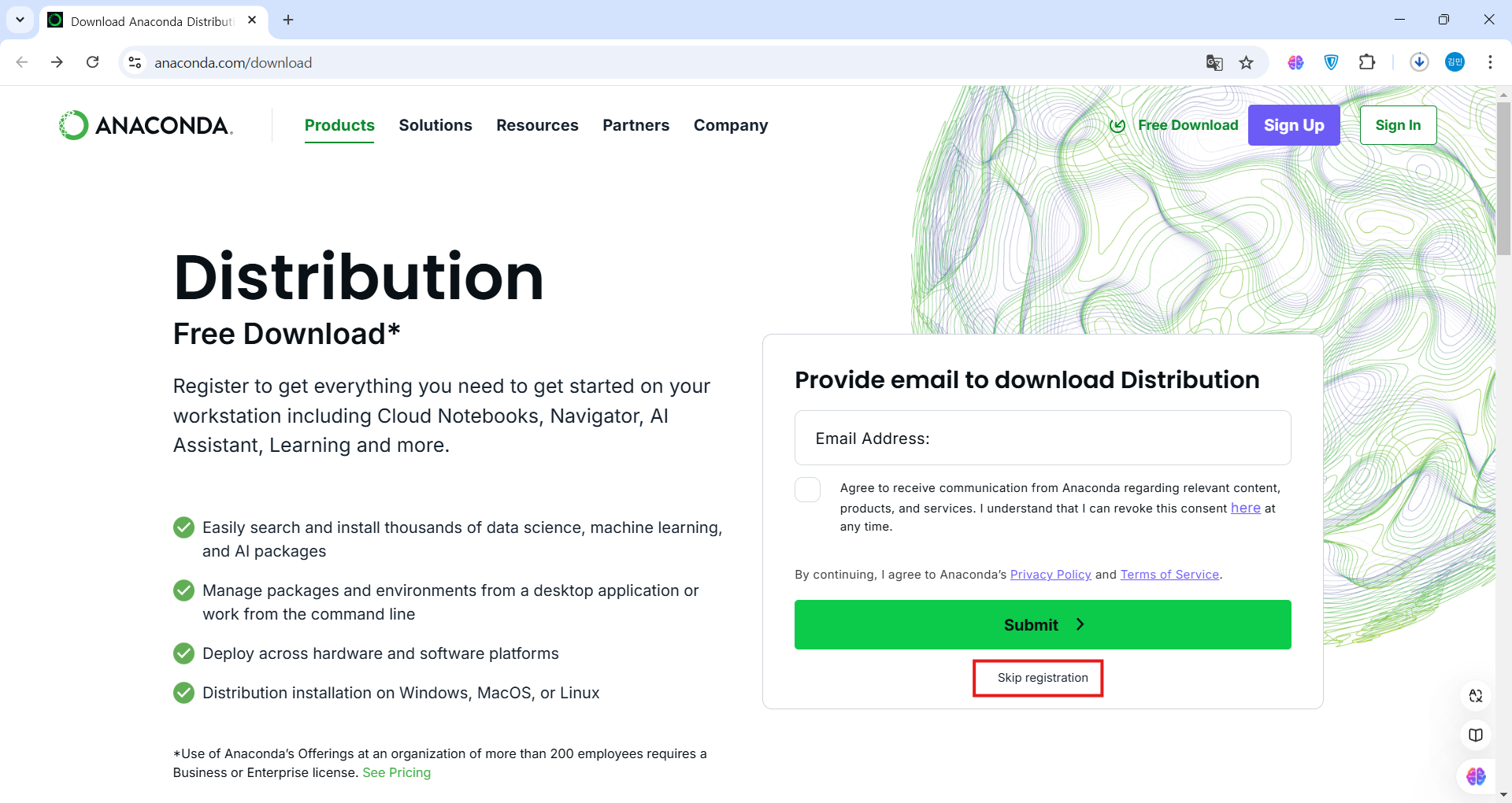

[Lab] Setting up the Anaconda practice environment

https://www.anaconda.com/download


Once the download finishes, run the installer and keep the default settings.

Create a virtual environment and activate it.

(base) C:\Users\032> conda create -n torch_book python=3.9.0

(base) C:\Users\032> conda env list

# conda environments:

#

# base * C:\Users\032\anaconda3

# torch_book C:\Users\032\anaconda3\envs\torch_book

(base) C:\Users\032> conda activate torch_book

# To leave the environment, or to delete it entirely later:

# (torch_book) C:\Users\032> conda deactivate

# (base) C:\Users\032> conda env remove -n torch_book

# Remove all packages in environment C:\Users\032\anaconda3\envs\torch_book:
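After activating, it can be worth confirming from inside Python that the interpreter really belongs to `torch_book`. The following is a minimal sketch using only the standard library; the function name `active_environment` is made up for this example, and `CONDA_DEFAULT_ENV` is the variable conda sets on activation.

```python
import os
import sys


def active_environment():
    """Return the name and location of the environment running this interpreter."""
    return {
        # Set by `conda activate`; None when no conda environment is active.
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
        # Installation prefix of the running interpreter.
        "prefix": sys.prefix,
        "python": ".".join(str(part) for part in sys.version_info[:3]),
    }


if __name__ == "__main__":
    for key, value in active_environment().items():
        print(f"{key}: {value}")
```

Inside the activated environment, `conda_env` should read `torch_book` and `prefix` should point at the `envs\torch_book` folder listed by `conda env list`.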

Install PyTorch and Jupyter Notebook, then run them.

https://pytorch.org/


(torch_book) C:\Users\032> pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

(torch_book) C:\Users\032> pip install jupyter notebook

# Verify the PyTorch installation

(torch_book) C:\Users\032> python

Python 3.9.0 (default, Nov 15 2020, 08:30:55) [MSC v.1916 64 bit (AMD64)] :: Anaconda, Inc. on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> torch.cuda.is_available()

True

>>> exit()

(torch_book) C:\Users\032> jupyter notebook
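The interactive check above can also be kept as a small script, which is convenient to rerun in the first cell of a notebook. This is only a sketch: `describe_torch` is a made-up helper name, and it returns early when PyTorch is not installed instead of raising an error.

```python
import importlib.util


def describe_torch():
    """Collect basic facts about the local PyTorch install, if there is one."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}

    import torch  # imported lazily so the script still runs without PyTorch

    info = {
        "installed": True,
        "version": torch.__version__,
        "built_for_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["gpu_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_torch().items():
        print(f"{key}: {value}")
```

With the `cu118` wheel installed as above, `built_for_cuda` would be expected to read `11.8`, and `cuda_available` should match the `True` seen in the interactive session.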

Check the CUDA version:

https://docs.nvidia.com/cuda/cuda-toolkit-release-notes/index.html

https://ko.wikipedia.org/wiki/CUDA

The graphics driver version is 528.02, and the highest CUDA version this driver supports is 12.0.
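This is why the `cu118` wheel installed earlier works here: CUDA 11.8 is not newer than the 12.0 the driver supports. A rough sketch of that comparison follows. The helper names are hypothetical, and the simple rule "wheel version must not exceed the driver's maximum" is a conservative assumption, not NVIDIA's full compatibility policy.

```python
import re


def driver_cuda_version(header_line):
    """Extract (major, minor) from the 'CUDA Version: X.Y' field of nvidia-smi."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", header_line)
    if match is None:
        raise ValueError("no 'CUDA Version' field found")
    return int(match.group(1)), int(match.group(2))


def wheel_cuda_version(tag):
    """Turn a wheel tag such as 'cu118' into (11, 8).

    Assumes the last digit is the minor version, as in cu118 or cu121.
    """
    match = re.fullmatch(r"cu(\d+)(\d)", tag)
    if match is None:
        raise ValueError(f"unexpected wheel tag: {tag!r}")
    return int(match.group(1)), int(match.group(2))


def wheel_fits_driver(header_line, tag):
    """True when the wheel's CUDA version does not exceed the driver's maximum."""
    return wheel_cuda_version(tag) <= driver_cuda_version(header_line)


header = "| NVIDIA-SMI 528.02 Driver Version: 528.02 CUDA Version: 12.0 |"
print(driver_cuda_version(header))         # (12, 0)
print(wheel_fits_driver(header, "cu118"))  # True
print(wheel_fits_driver(header, "cu121"))  # False
```

The `header` string is the version line from the `nvidia-smi` output of this machine.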

(base) C:\Users\032>nvidia-smi

Fri Jan 17 10:01:31 2025

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 528.02 Driver Version: 528.02 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name TCC/WDDM | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... WDDM | 00000000:01:00.0 Off | N/A |

| N/A 42C P0 14W / 50W | 0MiB / 4096MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

[Lab] PyTorch basic syntax

pip install requests

import torchvision.transforms as transforms

mnist_transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (1.0,))  # subtract mean 0.5, divide by std 1.0
])
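`Normalize(mean, std)` computes `(x - mean) / std` for every pixel, so the two arguments decide the value range the model sees. The plain-Python sketch below works this out for the setting above and for the `(0.5,), (0.5,)` setting used later in this post. The helper `normalize` is written only for illustration and is not the torchvision implementation.

```python
def normalize(pixel, mean, std):
    """Apply the per-channel formula used by Normalize: (x - mean) / std."""
    return (pixel - mean) / std


# ToTensor() scales pixel values to [0.0, 1.0], so the extremes are 0.0 and 1.0.
for mean, std in [(0.5, 1.0), (0.5, 0.5)]:
    low = normalize(0.0, mean, std)
    high = normalize(1.0, mean, std)
    print(f"mean={mean}, std={std}: [{low}, {high}]")
# mean=0.5, std=1.0: [-0.5, 0.5]
# mean=0.5, std=0.5: [-1.0, 1.0]
```

So `(0.5,), (1.0,)` only shifts the pixels to [-0.5, 0.5], while `(0.5,), (0.5,)` rescales them to [-1, 1].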

from torchvision.datasets import MNIST
import requests

download_root = r'C:\Users\032\SKT_AI'

train_dataset = MNIST(download_root, transform=mnist_transform, train=True, download=True)
# train=False loads the held-out split, so valid_dataset and test_dataset hold the same images
valid_dataset = MNIST(download_root, transform=mnist_transform, train=False, download=True)
test_dataset = MNIST(download_root, transform=mnist_transform, train=False, download=True)
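Because both `train=False` calls return the same split, a separate validation set has to be carved out of the training data if one is wanted. One common way is `torch.utils.data.random_split`. The sketch below uses a small fake dataset in place of MNIST so it runs without a download; the 80/20 ratio and the seed are assumptions chosen for the example.

```python
import torch
from torch.utils.data import TensorDataset, random_split

# Hypothetical stand-in for the MNIST training set: 100 fake 28x28 images.
images = torch.zeros(100, 1, 28, 28)
labels = torch.randint(0, 10, (100,))
full_train = TensorDataset(images, labels)

# Hold out 20% of the training data for validation (the ratio is an assumption).
n_valid = len(full_train) // 5
n_train = len(full_train) - n_valid
generator = torch.Generator().manual_seed(0)  # fixed seed for a repeatable split
train_subset, valid_subset = random_split(
    full_train, [n_train, n_valid], generator=generator
)

print(len(train_subset), len(valid_subset))  # 80 20
```

The same call should work on the real `train_dataset`, with the test split left untouched for the final evaluation.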

import torch.nn as nn

# A single linear layer: y = w * x + b
model = nn.Linear(in_features=1, out_features=1, bias=True)

class MLP(nn.Module):
    def __init__(self, inputs):
        super(MLP, self).__init__()
        self.layer = nn.Linear(inputs, 1)  # inputs -> 1 output
        self.activation = nn.Sigmoid()     # squash the output into (0, 1)

    def forward(self, X):
        X = self.layer(X)
        X = self.activation(X)
        return X

import torch.nn as nn

class MLP(nn.Module):
    def __init__(self):
        super(MLP, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=5),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2))

        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=30, kernel_size=5),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(2))

        self.layer3 = nn.Sequential(
            nn.Linear(in_features=30*5*5, out_features=10, bias=True),
            nn.ReLU(inplace=True))

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = x.view(x.shape[0], -1)
        x = self.layer3(x)
        return x
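The `in_features=30*5*5` in `layer3` only fits one input size. Tracing the spatial size through the two convolution and pooling stages shows that it corresponds to 32x32 inputs. The post does not state the input size, so treat 32x32 as an inference; the helper functions below are written for this example.

```python
def conv_out(size, kernel_size, stride=1, padding=0):
    """Output width/height of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def pool_out(size, kernel_size):
    """Output width/height of MaxPool2d(kernel_size) with its default stride."""
    return size // kernel_size


size = 32  # assumed input: 3-channel 32x32 images
size = pool_out(conv_out(size, kernel_size=5), 2)  # layer1: 32 -> 28 -> 14
size = pool_out(conv_out(size, kernel_size=5), 2)  # layer2: 14 -> 10 -> 5
print(size, 30 * size * size)  # 5 750
```

A 28x28 MNIST image would end at 4x4 instead, so this network would need `in_features=30*4*4` (and `in_channels=1`) to be used on MNIST.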

model = MLP()

print("Printing children\n-----------")
print(list(model.children()))
print("\n\n Printing Modules \n------")
print(list(model.modules()))
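The two listings differ in depth: `children()` yields only the direct submodules, while `modules()` walks the whole tree and starts with the model itself. A smaller, hypothetical model makes the difference easier to see than the full printout.

```python
import torch.nn as nn

# A small hypothetical model with one nested container.
toy = nn.Sequential(
    nn.Sequential(nn.Linear(4, 8), nn.ReLU()),
    nn.Linear(8, 2),
)

child_names = [type(m).__name__ for m in toy.children()]
module_names = [type(m).__name__ for m in toy.modules()]

print(child_names)   # ['Sequential', 'Linear']
print(module_names)  # ['Sequential', 'Sequential', 'Linear', 'ReLU', 'Linear']
```

For the `MLP` above, the same logic gives 3 children (`layer1` to `layer3`) and 12 modules: the model, the 3 containers, and the 8 layers inside them.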

pip install torchmetrics

import torch
import torchmetrics

# Generate random predictions and targets
preds = torch.randint(5, (10,))   # classes 0-4
target = torch.randint(5, (10,))  # classes 0-4

# Compute accuracy (multiclass)
acc = torchmetrics.functional.accuracy(preds, target, task="multiclass", num_classes=5)
print(f"Accuracy: {acc:.4f}")
# Accuracy: 0.4000 (example output; the inputs are random, so the value changes per run)
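For label tensors like these, the reported number is the fraction of positions where prediction and target agree, which is what micro-averaged accuracy measures. The sketch below reproduces that by hand with plain PyTorch, using fixed values chosen for this example so the result is repeatable.

```python
import torch

# Fixed example values so the result is reproducible.
preds = torch.tensor([0, 1, 2, 3, 4, 0, 1, 2, 3, 4])
target = torch.tensor([0, 1, 2, 0, 0, 0, 1, 4, 4, 4])

# Element-wise comparison gives a boolean tensor; its mean is the accuracy.
matches = preds == target
acc = matches.float().mean()

print(int(matches.sum()), len(target))  # 6 10
print(f"Accuracy: {acc:.4f}")           # Accuracy: 0.6000
```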

# Import libraries
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
from torchvision.datasets import MNIST
from torch.utils.data import DataLoader

# Hyperparameters
batch_size = 64
epoch = 10
lr = 0.001

# Define the preprocessing
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5,), (0.5,))
])

# Load the data
download_root = '../chap02/data/MNIST_DATASET'

train_dataset = MNIST(download_root, transform=transform, train=True, download=True)
valid_dataset = MNIST(download_root, transform=transform, train=False, download=True)
test_dataset = MNIST(download_root, transform=transform, train=False, download=True)

train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
valid_loader = DataLoader(dataset=valid_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=True)
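With `batch_size = 64`, the number of iterations per epoch follows directly from the dataset sizes. The arithmetic is sketched below, assuming the standard MNIST split of 60,000 training and 10,000 test images and the `DataLoader` default `drop_last=False`.

```python
import math

batch_size = 64
n_train, n_test = 60_000, 10_000  # sizes of the MNIST train and test splits

# With drop_last=False (the DataLoader default) the final partial batch is kept.
train_batches = math.ceil(n_train / batch_size)
test_batches = math.ceil(n_test / batch_size)
last_train_batch = n_train - (train_batches - 1) * batch_size

print(train_batches, test_batches, last_train_batch)  # 938 157 32
```

So `len(train_loader)` should come out as 938, and the last training batch of each epoch holds 32 images instead of 64.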

# Define the model
class SimpleNN(nn.Module):
    def __init__(self):
        super(SimpleNN, self).__init__()
        # Define the layers
        self.fc1 = nn.Linear(28 * 28, 128)
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 28 * 28)  # flatten each 28x28 image into a 784-element vector
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        x = self.fc3(x)  # raw logits; CrossEntropyLoss applies softmax internally
        return x

model = SimpleNN()

# Define the loss function and the optimizer
loss_fn = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=lr)

# Train the model
for i in range(epoch):
    for images, labels in train_loader:
        optimizer.zero_grad()          # reset the gradients
        preds = model(images)          # forward pass
        loss = loss_fn(preds, labels)  # compute the loss
        loss.backward()                # backpropagate
        optimizer.step()               # update the weights

    # One epoch finished; this prints the loss of the last batch only
    print(f'[Epoch]| [{i + 1}/{epoch}], [Loss]| {loss.item():.4f}')

# [Epoch]| [1/10], [Loss]| 0.3773

# [Epoch]| [2/10], [Loss]| 0.0620

# [Epoch]| [3/10], [Loss]| 0.0596

# [Epoch]| [4/10], [Loss]| 0.0694

# [Epoch]| [5/10], [Loss]| 0.0087

# [Epoch]| [6/10], [Loss]| 0.0036

# [Epoch]| [7/10], [Loss]| 0.0704

# [Epoch]| [8/10], [Loss]| 0.0185

# [Epoch]| [9/10], [Loss]| 0.0634

# [Epoch]| [10/10], [Loss]| 0.0047

# Validate the model

model.eval()  # switch to evaluation mode (affects layers such as dropout and batch norm)

total = 0
correct = 0

with torch.no_grad():  # disable gradient tracking
    for images, labels in test_loader:
        preds = model(images)  # model predictions (logits)
        _, predicted = torch.max(preds, 1)  # index of the largest logit = predicted class
        total += labels.size(0)  # running count of samples
        correct += (predicted == labels).sum().item()

# Compute the accuracy in percent
accuracy = (correct / total) * 100
print(f'[Accuracy of the model on test dataset]: {accuracy:.2f}')

# [Accuracy of the model on test dataset]: 97.45
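The check at the start of this post confirmed that CUDA is available, but the loops above never move the model or the batches off the CPU. A possible way to make the evaluation device-aware is sketched below. The `evaluate` helper and the toy data are made up for this example, and `nn.Identity` stands in for a trained model so the result can be checked by hand.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, device):
    """Return accuracy in percent, running the model on `device`."""
    model = model.to(device)
    model.eval()
    correct = total = 0
    with torch.no_grad():
        for inputs, labels in loader:
            inputs, labels = inputs.to(device), labels.to(device)
            predicted = model(inputs).argmax(dim=1)
            correct += (predicted == labels).sum().item()
            total += labels.size(0)
    return 100.0 * correct / total


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical toy data: the "model" is nn.Identity, so the inputs act as logits.
logits = torch.tensor([[2.0, 0.1], [0.3, 1.5], [0.9, 0.2], [0.4, 0.6]])
labels = torch.tensor([0, 1, 1, 1])
loader = DataLoader(TensorDataset(logits, labels), batch_size=2)

print(evaluate(nn.Identity(), loader, device))  # 75.0
```

Calling `evaluate(model, test_loader, device)` with the trained `SimpleNN` should report the same accuracy as the loop above, and the training loop can move `images` and `labels` to the device with the same `.to(device)` pattern.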
